Have you met TensorRT?

Welcome to our blog, where we explore the fascinating world of TensorRT—a powerful tool that unleashes unparalleled speed and efficiency in AI. In this first part, we’ll take a glimpse into the extraordinary capabilities of TensorRT and its impact on deep learning. Imagine a magical optimization framework that enhances AI models, enabling them to process data at lightning speed without compromising accuracy. It’s like giving AI a boost of superpowers, making it faster, smarter, and more efficient. Join us on this captivating journey as we uncover the wonders of TensorRT and its potential to revolutionize the field of artificial intelligence.

Source from Github

If you want to explore the code and gain a deeper understanding of the concepts discussed in this blog on TensorRT and image classification, the complete source code is available in the corresponding GitHub repository, which houses an array of edge AI blogs and their source code for further exploration.

In particular, the source code for this specific blog, covering the fundamentals of TensorRT, image classification with PyTorch, ONNX conversion, TensorRT engine generation, and inference speedup measurement, is available in the notebook found here. By delving into this notebook, you can follow along with the step-by-step implementations and gain hands-on experience in harnessing the power of TensorRT for edge AI applications. Happy coding and exploring the realms of accelerated AI with PyTorch and TensorRT!

Pre-requisites and Installation

1. Hardware requirements

- NVIDIA GPU

2. Software requirements

- Ubuntu >= 18.04

- Python >= 3.8

3. Installation Guide

- Create conda environment and install required python packages.

conda create -n trt python=3.8
conda activate trt
pip install -r requirements.txt

- Install TensorRT:

  - Download and install NVIDIA CUDA 11.4 or later following the official instructions: link

  - Download and extract the CuDNN library for your CUDA version (>8.9.0) from: link

  - Download and extract the NVIDIA TensorRT library for your CUDA version from: link. The minimum required version is 8.5. Follow the Installation Guide for your system and make sure the Python bindings are installed.

  - Add the absolute paths to the CUDA, TensorRT, and CuDNN libs to the environment variable PATH or LD_LIBRARY_PATH.

- Install PyCUDA:

pip install pycuda
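Before moving on, it can help to confirm that the packages are importable and that the library directories are on the search path. The following is a minimal sketch using only the Python standard library. The helper names check_packages and library_path_entries are hypothetical, and the package names checked are simply the ones imported later in this post.

```python
import importlib.util
import os


def check_packages(names=("torch", "tensorrt", "pycuda")):
    """Return {package_name: True/False} depending on whether it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def library_path_entries(var="LD_LIBRARY_PATH"):
    """Return the directories listed in an environment variable such as LD_LIBRARY_PATH."""
    value = os.environ.get(var, "")
    return [entry for entry in value.split(os.pathsep) if entry]


if __name__ == "__main__":
    for name, found in check_packages().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
    for entry in library_path_entries():
        print("library path entry:", entry)
```

If a package is reported as missing, revisit the corresponding installation step before running the rest of the code.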

Introduction

TensorRT is an optimization framework designed to accelerate AI inference, making it faster and more efficient. Think of it as a performance booster for AI models, enhancing their capabilities without compromising accuracy. Imagine you have a collection of handwritten letters, each representing a unique story. You wish to organize and analyze these letters, but they are scattered and unstructured. That’s when you bring in a talented editor who transforms these letters into a beautifully composed novel, ready to be read and understood.

In this analogy, the handwritten letters represent a PyTorch model—an impressive piece of work but lacking the efficiency needed for real-time inference. The editor symbolizes TensorRT, refining the model and optimizing it to perform with lightning-fast speed and accuracy. Similar to how the editor transforms the letters into a coherent novel, TensorRT takes the PyTorch model and enhances it, making it highly efficient and ready to tackle complex tasks in a fraction of the time. With TensorRT’s optimization techniques, just as the editor refines the structure and language of the letters, the model undergoes a transformative process. TensorRT eliminates inefficiencies, fuses layers, and calibrates precision, resulting in an optimized model that can process data swiftly and accurately—like a beautifully composed novel ready to be enjoyed.

In our upcoming blog posts, we will take you on a journey where we explore practical examples of TensorRT in action. We will witness its impact on image classification, object detection, and more. Through these real-world applications, you will discover how TensorRT empowers AI practitioners to achieve remarkable performance gains, opening doors to innovative solutions and possibilities.

So, without further ado, let’s dive into the realm of TensorRT and witness firsthand the transformative power it holds in the field of artificial intelligence.

Step 1 : Hotdog Classification Using Pure PyTorch

Now that we have familiarized ourselves with the wonders of TensorRT, let’s dive into a practical example to witness its impact firsthand. Imagine a scenario where we want to classify images of different objects, specifically determining whether an image contains a hotdog or not. To tackle this deliciously challenging task, we will leverage a pretrained PyTorch model based on ResNet architecture, which has been trained on the vast and diverse ImageNet dataset.

The problem at hand is intriguing yet straightforward: we aim to develop an AI model capable of differentiating between hotdogs and other objects. By utilizing the power of deep learning and the wealth of knowledge encoded within the pretrained PyTorch model, we can accomplish this with remarkable accuracy.

To begin, we take an image of a mouthwatering hotdog as our test subject. The pretrained PyTorch model, being a master of image recognition, will scrutinize the visual features of the hotdog and perform intricate calculations to make its classification decision. It will utilize its knowledge of patterns, shapes, and textures acquired during its training on the vast ImageNet dataset, making an educated guess as to whether the image depicts a hotdog or something else entirely.

This process might seem effortless to us, but behind the scenes, the AI model performs an intricate dance of calculations and computations. It analyzes the pixels of the image, extracts features, and applies complex mathematical operations to arrive at a confident prediction.

from torchvision import models
import cv2
import torch
from torchvision.transforms import Resize, Compose, ToTensor, Normalize


def preprocess_image(img_path):
    # transformations for the input data
    transforms = Compose([
        ToTensor(),
        Resize(224),
        Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ])
    # read input image
    input_img = cv2.imread(img_path)
    # do transformations
    input_data = transforms(input_img)
    batch_data = torch.unsqueeze(input_data, 0)
    return batch_data


def postprocess(output_data):
    # get class names
    with open("../data/imagenet-classes.txt") as f:
        classes = [line.strip() for line in f.readlines()]
    # calculate human-readable value by softmax
    confidences = torch.nn.functional.softmax(output_data, dim=1)[0] * 100
    # find top predicted classes
    _, indices = torch.sort(output_data, descending=True)
    i = 0
    # print the top classes predicted by the model
    while confidences[indices[0][i]] > 50:
        class_idx = indices[0][i]
        print(
            "class:",
            classes[class_idx],
            ", confidence:",
            confidences[class_idx].item(),
            "%, index:",
            class_idx.item(),
        )
        i += 1


input = preprocess_image("../data/hotdog.jpg").cuda()
model = models.resnet50(pretrained=True)
model.eval()
model.cuda()
output = model(input)
postprocess(output)

In the above code, we have a script that demonstrates the usage of a pretrained ResNet-50 model from torchvision for image classification. Let’s break down the code and understand its functionality:

First, we import the necessary libraries, including models from torchvision, cv2, and torch, which provide the tools for working with deep learning models and image processing.

The script defines two important functions. The preprocess_image function takes an image path as input and applies a series of transformations to preprocess the image. These transformations include converting the image to a tensor, resizing it so that its shorter side is 224 pixels (Resize(224) keeps the aspect ratio), and normalizing its pixel values using the mean and standard deviation values commonly used in ImageNet dataset preprocessing.
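To make the preprocessing arithmetic concrete, here is a small sketch in plain Python. It assumes torchvision's convention that an integer size passed to Resize applies to the shorter side of the image. The function names normalize_pixel and shorter_side_resize are hypothetical and exist only for this illustration.

```python
IMAGENET_MEAN = [0.485, 0.456, 0.406]
IMAGENET_STD = [0.229, 0.224, 0.225]


def normalize_pixel(pixel):
    """Scale an 8-bit 3-channel pixel to [0, 1], then standardize each channel."""
    return [
        (value / 255.0 - mean) / std
        for value, mean, std in zip(pixel, IMAGENET_MEAN, IMAGENET_STD)
    ]


def shorter_side_resize(height, width, size=224):
    """Output (height, width) when the shorter side is scaled to `size`,
    keeping the aspect ratio (the longer side is truncated to an integer)."""
    if height <= width:
        return size, int(size * width / height)
    return int(size * height / width), size


print(normalize_pixel([255, 0, 128]))
print(shorter_side_resize(480, 640))
```

Because the aspect ratio is preserved, a non-square photo does not become a square tensor, and the shape of the example input is what the exported model will later expect.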

Next, we have the postprocess function, which processes the output of the model. It reads the class names from a text file (imagenet-classes.txt), calculates the confidence scores using softmax, and sorts the output to find the top predicted classes. It then prints the class name, confidence score, and class index for each top prediction.

Moving on, we preprocess the input image using the preprocess_image function. In this case, we are using the image hotdog.jpg. The resulting tensor is stored in the input variable and is moved to the GPU (assuming it is available) using .cuda().

We then load the ResNet-50 model using models.resnet50(pretrained=True). This fetches the pretrained weights from the model zoo. The model is set to evaluation mode (model.eval()), and its parameters are moved to the GPU using .cuda().

Now, we perform a forward pass through the model by passing the preprocessed input tensor (input) to the ResNet-50 model (model). This gives us the output tensor (output).

Finally, we call the postprocess function with the output tensor to interpret and display the classification results. It prints the top predicted classes along with their corresponding confidence scores and class indices.

By following this code, a reader can classify an input image using the pretrained ResNet-50 model, obtaining the predicted class labels and their confidence scores. This example demonstrates the power of deep learning in image classification tasks and showcases how pretrained models can be easily utilized for real-world applications.
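The post-processing step boils down to a softmax followed by a thresholded ranking. The sketch below reproduces that logic in plain Python on made-up scores. The class names, the logits, and the helper names are all illustrative assumptions, not values produced by the model.

```python
import math


def softmax_percent(logits):
    """Convert raw scores to percentages that sum to 100."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [100.0 * e / total for e in exps]


def top_predictions(logits, class_names, threshold=50.0):
    """Return (name, confidence, index) for classes above the threshold, best first."""
    confidences = softmax_percent(logits)
    order = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    return [(class_names[i], confidences[i], i) for i in order if confidences[i] > threshold]


logits = [1.0, 4.0, 0.5]
names = ["pizza", "hotdog", "burger"]
print(top_predictions(logits, names))
```

Since the percentages sum to 100, at most one class can exceed a 50% threshold, which is why the original loop prints a single line.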

If everything goes well, you should see an output similar to this :

class: hotdog, hot dog, red hot , confidence: 60.50566864013672 %, index: 934

This output represents the classification result of the input image (in this case, a hotdog image) using the pretrained ResNet-50 model. The model has predicted that the image belongs to the class “hotdog, hot dog, red hot” with a confidence score of 60.51%. The corresponding class index is 934.

In simpler terms, the model has successfully recognized the image as a hotdog with a relatively high level of confidence. This showcases the capability of the ResNet-50 model to accurately classify objects in images, making it a valuable tool for various computer vision tasks.

Step 2: PyTorch to ONNX Conversion

In the previous section, we successfully built and utilized a PyTorch model for hotdog classification. Now, let’s take a step further and optimize the inference performance using TensorRT. In this section, we will explore the process of converting a PyTorch model into a TensorRT engine.

To convert our PyTorch model to a TensorRT engine, we’ll follow a two-step process that involves the intermediate conversion to the ONNX format. This allows us to seamlessly integrate PyTorch and TensorRT, unlocking the benefits of accelerated inference.

The first step is to convert our PyTorch model to the ONNX format. ONNX, short for Open Neural Network Exchange, acts as a bridge between different deep learning frameworks. It provides a standardized representation of our model’s architecture and parameters, ensuring compatibility across platforms and frameworks. By exporting our PyTorch model to ONNX, we capture its structure and operations in a portable and platform-independent format. This enables us to work with the model using other frameworks, such as TensorRT, without losing important information or needing to reimplement the model from scratch.

To convert our PyTorch model to ONNX, we need to follow a few simple steps. The model must have its trained weights loaded and should be in evaluation mode; in our case, the pretrained ResNet-50 from Step 1 already satisfies both. We then export the model to the ONNX format using the torch.onnx.export function, passing an example input tensor (which determines the input shape) and the desired output file name.

ONNX_FILE_PATH = '../deploy_tools/resnet50.onnx'

torch.onnx.export(model, input, ONNX_FILE_PATH, input_names=['input'],

output_names=['output'], export_params=True)

The above lines are used to export a PyTorch model to the ONNX format. Here’s a breakdown of what each line does:

- ONNX_FILE_PATH = '../deploy_tools/resnet50.onnx': This line defines the path and filename where the exported ONNX model will be saved. In this example, the ONNX file will be saved as “resnet50.onnx” in the “../deploy_tools” directory.

- torch.onnx.export(model, input, ONNX_FILE_PATH, input_names=['input'], output_names=['output'], export_params=True): This line exports the PyTorch model to ONNX format using the torch.onnx.export function. It takes several arguments:

  - model: This is the PyTorch model object that you want to export to ONNX.

  - input: This represents an example input tensor that will be used to trace the model. The shape and data type of this tensor should match the expected input for the model.

  - ONNX_FILE_PATH: This is the path and filename where the exported ONNX model will be saved, as defined in the previous line.

  - input_names=['input']: This specifies the names of the input nodes in the exported ONNX model. In this case, the input node will be named “input”.

  - output_names=['output']: This specifies the names of the output nodes in the exported ONNX model. In this case, the output node will be named “output”.

  - export_params=True: This indicates whether to export the parameters (weights and biases) of the model along with the model architecture. Setting it to True means the parameters will be included in the exported ONNX model.

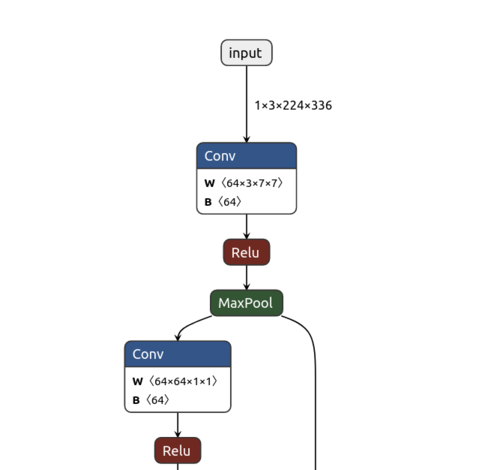

Using the above code, the PyTorch model will be converted to the ONNX format and saved as an ONNX file at the specified location. The exported ONNX model can then be used in other frameworks or tools that support ONNX, allowing for interoperability and deployment in different runtime environments.

Once we have successfully converted our PyTorch model to the ONNX format, it’s time to take a closer look at the inner workings of this intermediate representation. To gain a visual understanding of our ONNX model, we can utilize a powerful tool called Netron.

Netron is a user-friendly model visualization tool that allows us to explore and analyze the structure of our ONNX model. With its intuitive interface and interactive features, Netron offers a delightful experience for visualizing deep learning models. To visualize your ONNX model and confirm the success of the conversion process, you can follow these steps using the online tool Netron:

- Visit the Netron website: Go to the Netron website at netron.app

- Load the ONNX model: Click on the “Open” button on the Netron website. This will prompt you to select your ONNX file from your local machine.

- Explore the model: Once the ONNX model is loaded, Netron will display a visual representation of its structure. You can navigate through the model’s layers, examine node connections, and inspect input and output shapes.

- Analyze the model: Use Netron to gain insights into the architecture and operations of your ONNX model. Verify that the conversion from PyTorch to ONNX was successful by examining the model’s structure and checking the expected input and output configurations.

Here is an example of what the ONNX model visualization looks like in Netron:

Step 3 : Building TensorRT Engine from ONNX

Now that we have successfully converted our PyTorch model to the ONNX format, it’s time to take the next step towards unleashing the power of TensorRT.

TensorRT is a high-performance deep learning inference optimizer and runtime library developed by NVIDIA. It is designed to optimize and accelerate neural network models, taking full advantage of GPU capabilities. By converting our ONNX model to TensorRT, we can harness the exceptional speed and efficiency offered by GPUs.

The process of converting ONNX to TensorRT involves leveraging the TensorRT Python API. This API provides a straightforward way to generate a TensorRT engine, which is a highly optimized representation of our model for efficient inference.

With the TensorRT engine in hand, we can take advantage of various optimizations and techniques offered by TensorRT. These include layer fusion, precision calibration, and dynamic tensor memory management, all aimed at maximizing inference performance.
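To give a feel for what layer fusion means, the sketch below folds a batch-normalization step into the linear operation that precedes it, so two operations become one. This is a scalar stand-in for a convolution followed by batch norm, written only to illustrate the idea. It is not a description of how TensorRT implements fusion internally, and all names and numbers are illustrative assumptions.

```python
import math


def conv_then_batchnorm(x, w, b, gamma, beta, mean, var, eps=1e-5):
    """Two separate steps: a linear op (w*x + b) followed by batch normalization."""
    y = w * x + b
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


def fold_batchnorm(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold the batch-norm parameters into a single fused weight and bias."""
    scale = gamma / math.sqrt(var + eps)
    return w * scale, (b - mean) * scale + beta


w, b = 0.8, 0.1
bn = dict(gamma=1.5, beta=-0.2, mean=0.3, var=0.25)
fused_w, fused_b = fold_batchnorm(w, b, **bn)
x = 2.0
print(conv_then_batchnorm(x, w, b, **bn), fused_w * x + fused_b)
```

Both expressions give the same result up to floating-point rounding, but the fused form needs one pass over the data instead of two, which is the kind of saving fusion aims for at inference time.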

In our upcoming sections, we will explore the Python code required to generate the TensorRT engine from our ONNX model. By following these steps, we will unlock the immense potential of TensorRT and experience a significant boost in inference speed and efficiency.

import pycuda.driver as cuda

import pycuda.autoinit

import numpy as np

import tensorrt as trt

In these lines, we import the necessary libraries for our script: pycuda.driver for CUDA operations, pycuda.autoinit for initializing the GPU, numpy for numerical computations, and tensorrt for working with TensorRT.

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

Here, we create a TensorRT logger object (TRT_LOGGER) with the log level set to trt.Logger.WARNING. This logger is used to manage logging messages during the conversion process.

builder = trt.Builder(TRT_LOGGER)

We create a TensorRT builder object (builder) using the previously defined logger. The builder is responsible for building TensorRT networks.

EXPLICIT_BATCH = 1 << (int)(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)

network = builder.create_network(EXPLICIT_BATCH)

Here, we define the EXPLICIT_BATCH flag, which enables explicit batch mode in TensorRT. We then create a TensorRT network using the builder, specifying the EXPLICIT_BATCH flag.

parser = trt.OnnxParser(network, TRT_LOGGER)

success = parser.parse_from_file(ONNX_FILE_PATH)

for idx in range(parser.num_errors):
    print(parser.get_error(idx))

We create an ONNX parser object (parser) associated with the network and logger. The parser is responsible for parsing the ONNX file and populating the TensorRT network. We parse the ONNX model by calling parser.parse_from_file(ONNX_FILE_PATH). If there are any parsing errors, we retrieve and print them using a loop.
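Printing the errors is useful, but a script usually should not continue if parsing failed. The following sketch shows one way to turn the parser's error list into an exception. It relies only on the num_errors attribute and get_error method used above; the helper names and the FakeParser class are hypothetical stand-ins so the example runs without TensorRT installed.

```python
def collect_parser_errors(parser):
    """Gather all error messages reported by an ONNX parser-like object."""
    return [str(parser.get_error(idx)) for idx in range(parser.num_errors)]


def ensure_parsed(success, parser):
    """Raise a RuntimeError with every parser message if parsing failed."""
    if not success:
        messages = collect_parser_errors(parser)
        raise RuntimeError("ONNX parsing failed:\n" + "\n".join(messages))


class FakeParser:
    """Stand-in used only for this illustration; not part of TensorRT."""

    def __init__(self, errors):
        self._errors = errors
        self.num_errors = len(errors)

    def get_error(self, idx):
        return self._errors[idx]


try:
    ensure_parsed(False, FakeParser(["unsupported op", "bad shape"]))
except RuntimeError as exc:
    print(exc)
```

In the real script, the equivalent call would be ensure_parsed(success, parser) placed right after parser.parse_from_file(ONNX_FILE_PATH).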

config = builder.create_builder_config()

config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, 1 << 22) # 4 MiB

config.set_flag(trt.BuilderFlag.FP16)

We create a builder configuration object (config) that allows us to configure various settings. Here, we set the workspace memory pool limit to 4 MiB (1 << 22 bytes). The call config.set_flag(trt.BuilderFlag.FP16) enables FP16 precision, which allows TensorRT to use half-precision kernels where they are faster.
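The workspace limit is given in bytes, and bit shifts are easy to misread. The small sketch below spells out the arithmetic; the constant MIB and the helper mib are hypothetical names introduced only for this example.

```python
MIB = 1 << 20  # one mebibyte in bytes


def mib(count):
    """Convert a number of mebibytes to bytes."""
    return count * MIB


print((1 << 22) // MIB)      # how many MiB the value 1 << 22 represents
print(mib(1024) == 1 << 30)  # 1024 MiB is the same as 1 GiB
```

With such a helper, the limit could be written as config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, mib(4)), which makes the intended size explicit.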

serialized_engine = builder.build_serialized_network(network, config)

Using the builder and configuration, we invoke builder.build_serialized_network(network, config) to build the TensorRT engine and obtain the serialized engine data.

with open("../deploy_tools/resnet.engine", "wb") as f:
    f.write(serialized_engine)

Finally, we open a file named “resnet.engine” in binary write mode ("wb") using a with open block. We write the serialized engine data to the file, saving the TensorRT engine for future inference.
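Since building an engine can take a while, a common pattern is to build it once and reuse the saved file afterwards. The sketch below shows that caching logic with a dummy builder in place of TensorRT. The function load_or_build and the dummy_build callable are hypothetical, and the sketch assumes the build result can be converted with bytes().

```python
import os
import tempfile


def load_or_build(engine_path, build_fn):
    """Return serialized engine bytes, building and caching them only if needed."""
    if os.path.exists(engine_path):
        with open(engine_path, "rb") as f:
            return f.read()
    data = bytes(build_fn())
    with open(engine_path, "wb") as f:
        f.write(data)
    return data


# Demonstration with a dummy builder instead of TensorRT.
calls = []


def dummy_build():
    calls.append(1)
    return b"engine-bytes"


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "resnet.engine")
    first = load_or_build(path, dummy_build)
    second = load_or_build(path, dummy_build)
    print(first == second, len(calls))
```

Keep in mind that a serialized engine is generally tied to the GPU model and TensorRT version it was built with, so a cached file should be rebuilt when either changes.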

These lines of code collectively convert the provided ONNX model into a TensorRT engine, utilizing the TensorRT Python API and its optimization capabilities. Putting it all together, the final script is as follows :

import pycuda.driver as cuda
import pycuda.autoinit
import numpy as np
import tensorrt as trt

# ONNX_FILE_PATH is the path defined in Step 2
TRT_LOGGER = trt.Logger(trt.Logger.WARNING)
builder = trt.Builder(TRT_LOGGER)

EXPLICIT_BATCH = 1 << (int)(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
network = builder.create_network(EXPLICIT_BATCH)

parser = trt.OnnxParser(network, TRT_LOGGER)
success = parser.parse_from_file(ONNX_FILE_PATH)
for idx in range(parser.num_errors):
    print(parser.get_error(idx))

config = builder.create_builder_config()
config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, 1 << 22) # 4 MiB
config.set_flag(trt.BuilderFlag.FP16)

serialized_engine = builder.build_serialized_network(network, config)

with open("../deploy_tools/resnet.engine", "wb") as f:
    f.write(serialized_engine)

Step 4 : Performing Inference on TensorRT Engine

Performing inference using the TensorRT engine is a breeze! We start by loading the serialized engine, which contains all the optimizations applied by TensorRT to our deep learning model. With the engine and execution context set up, we prepare input and output buffers that will hold the data for classification and predictions. The magic lies in TensorRT’s ability to work with GPU memory, tapping into the parallel processing power of the GPU for lightning-fast inference.

Next, we feed our input data, like an image, to the engine. It swiftly processes the data through its optimized network, streamlining calculations and fusing operations for maximum efficiency. The outcome? Rapid and accurate predictions for our image classification task.

Once the engine makes predictions on the GPU, we fetch the results by transferring the output data back to the CPU memory. This step enables us to perform any further post-processing as needed for our application’s specific requirements.

Let’s break down the provided inference code step by step and explain what each part does:

# Create a TensorRT runtime and load the serialized engine from the file
runtime = trt.Runtime(TRT_LOGGER)
with open("../deploy_tools/resnet.engine", "rb") as f:
    serialized_engine = f.read()

engine = runtime.deserialize_cuda_engine(serialized_engine)
context = engine.create_execution_context()

In this part of the code, we start by loading the pre-built TensorRT engine from the file resnet.engine using a file stream. The engine’s serialized data is read as binary and stored in the variable serialized_engine. Next, we use the TensorRT runtime to deserialize this binary data into a usable engine with the deserialize_cuda_engine() function, and store the result in the engine variable. The engine object represents our highly optimized deep learning model tailored for execution on NVIDIA GPUs. We then create an execution context named context, which allows us to interact with the engine and perform inference.

# Determine dimensions and create page-locked memory buffers for host inputs/outputs

h_input = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(0)), dtype=np.float32)

h_output = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(1)), dtype=np.float32)

In this section, we determine the dimensions of the input and output bindings required by the TensorRT engine. The call engine.get_binding_shape(0) returns the shape (dimensions) of the input binding, and engine.get_binding_shape(1) returns the shape of the output binding. In general, the input and output bindings are stored serially. This implies that if there are multiple inputs or outputs, their shapes can be accessed using their respective indices in the bindings. For example, if we had 2 inputs and 1 output, the two inputs would have indices 0 and 1, while the output would have index 2. We use cuda.pagelocked_empty() to create page-locked (pinned) memory buffers on the host (CPU) to hold the input and output data. Page-locked memory ensures that data transfers between the host (CPU) and the device (GPU) are efficient and do not involve memory swapping.
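The buffer sizes follow directly from the binding shapes: the number of elements is the product of the dimensions, and each float32 element takes 4 bytes. The sketch below works through that arithmetic in plain Python. The shapes are assumed for illustration (one 3x224x224 image in, 1000 class scores out); the real input shape depends on the example image used during export. The helper names are hypothetical.

```python
import math


def volume(shape):
    """Number of elements in a tensor of the given shape."""
    return math.prod(shape)


def buffer_nbytes(shape, bytes_per_element=4):
    """Bytes needed for a float32 buffer of the given shape."""
    return volume(shape) * bytes_per_element


# Assumed shapes for illustration: one 3x224x224 image in, 1000 class scores out.
bindings = [("input", (1, 3, 224, 224)), ("output", (1, 1000))]
for index, (name, shape) in enumerate(bindings):
    print(index, name, volume(shape), buffer_nbytes(shape))
```

The element count plays the role of trt.volume(...) in the code above, and the byte count corresponds to the nbytes value later passed to cuda.mem_alloc().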

# Allocate device memory for inputs and outputs.

d_input = cuda.mem_alloc(h_input.nbytes)

d_output = cuda.mem_alloc(h_output.nbytes)

In this part, we allocate device memory on the GPU for storing the input and output data during inference using the cuda.mem_alloc() function. The size of each device buffer matches the size of the corresponding page-locked host buffer, h_input or h_output, that we created earlier.

# Create a CUDA stream to perform asynchronous memory transfers and execution

stream = cuda.Stream()

Here, we create a CUDA stream named stream, which allows us to perform asynchronous memory transfers and inference execution on the GPU. Asynchronous execution helps overlap data transfers and computations, improving overall performance.

# Preprocess the input image and transfer it to the GPU.

host_input = np.array(preprocess_image("../data/hotdog.jpg").numpy(), dtype=np.float32, order='C')

cuda.memcpy_htod_async(d_input, host_input, stream)

In this part, we preprocess the input image, which in this case is hotdog.jpg to prepare it for inference. The image is converted to a NumPy array and set to dtype np.float32, which matches the data type expected by the TensorRT engine. The preprocess_image function is used to perform any necessary transformations or normalization specific to the model’s input requirements. The preprocessed input image is then asynchronously transferred from the host (CPU) to the device (GPU) memory using cuda.memcpy_htod_async().

# Run inference on the TensorRT engine.

context.execute_async_v2(bindings=[int(d_input), int(d_output)], stream_handle=stream.handle)

Here, we execute the inference on the TensorRT engine using the execution context’s execute_async_v2() function. We pass the input and output bindings to the engine using the bindings parameter, which is a list containing the device memory addresses of the input and output data. The stream_handle parameter ensures that the inference runs asynchronously on the GPU.

# Transfer predictions back from the GPU to the CPU.

cuda.memcpy_dtoh_async(h_output, d_output, stream)

After the inference is complete, the output predictions reside in the device (GPU) memory. We use cuda.memcpy_dtoh_async() to transfer the predictions from the GPU to the host (CPU) memory in an asynchronous manner.

# Synchronize the stream to wait for the inference to finish.

stream.synchronize()

Before accessing the output predictions on the CPU, we synchronize the CUDA stream using stream.synchronize(). This ensures that all GPU computations and data transfers are complete before we proceed to post-process the predictions.

# Convert the output data to a Torch tensor and perform post-processing.

tensorrt_output = torch.Tensor(h_output).unsqueeze(0)

postprocess(tensorrt_output)

Finally, we convert the output predictions from the host (CPU) memory, which are stored in the h_output buffer, into a Torch tensor. The unsqueeze(0) operation adds a batch dimension to the tensor. The Torch tensor tensorrt_output now contains the final predictions obtained from the TensorRT engine. We then pass it to the postprocess function to interpret the results and present them in a human-readable format.

Putting it all together, the script for inference on the TensorRT engine looks like this:

runtime = trt.Runtime(TRT_LOGGER)

with open("../deploy_tools/resnet.engine", "rb") as f:

    serialized_engine = f.read()

engine = runtime.deserialize_cuda_engine(serialized_engine)

context = engine.create_execution_context()

# Determine dimensions and create page-locked memory buffers (i.e. won't be swapped to disk) to hold host inputs/outputs.

h_input = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(0)), dtype=np.float32)

h_output = cuda.pagelocked_empty(trt.volume(engine.get_binding_shape(1)), dtype=np.float32)

# Allocate device memory for inputs and outputs.

d_input = cuda.mem_alloc(h_input.nbytes)

d_output = cuda.mem_alloc(h_output.nbytes)

# Create a stream in which to copy inputs/outputs and run inference.

stream = cuda.Stream()

# read input image and preprocess

host_input = np.array(preprocess_image("../data/hotdog.jpg").numpy(), dtype=np.float32, order='C')

# Transfer input data to the GPU.

cuda.memcpy_htod_async(d_input, host_input, stream)

# Run inference.

context.execute_async_v2(bindings=[int(d_input), int(d_output)], stream_handle=stream.handle)

# Transfer predictions back from the GPU.

cuda.memcpy_dtoh_async(h_output, d_output, stream)

# Synchronize the stream

stream.synchronize()

# postprocess output

tensorrt_output = torch.Tensor(h_output).unsqueeze(0)

postprocess(tensorrt_output)

With this, the inference process using the TensorRT engine is complete. The optimized engine takes advantage of GPU acceleration and sophisticated optimizations to deliver rapid and efficient predictions for our image classification model.

Time to Retrospect

Consistency Validation

As we draw the curtains on our exploration of PyTorch and TensorRT in the world of image classification, it’s time to reflect on the findings of our journey. One crucial aspect was comparing the output values obtained from PyTorch with those from the FP16 TensorRT engine, in order to measure any discrepancies introduced during optimization. The reduced precision and the optimizations did cause small deviations in the output values, but for our test image they were minor and did not change the prediction. This gives us confidence that the TensorRT engine preserves the behavior of our image classification model.

# Calculate MAE between pure torch output and TensorRT inference output

mae = torch.mean(torch.abs(output.cpu() - tensorrt_output))

print("MAE:", mae.item())

The output of this block of code would look something like:

MAE: 0.00590712483972311

The Mean Absolute Error between the PyTorch output and tensorrt_output is close to zero, which indicates that the FP16 results are very similar (if not equal) to the pure PyTorch output.
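To build intuition for where such small differences come from, the sketch below rounds a few made-up scores to half precision using Python's struct module and computes the same kind of mean absolute error. The scores and helper names are illustrative assumptions. In a real FP16 engine the rounding happens at many layers and accumulates, so this only shows the basic effect.

```python
import struct


def to_fp16_and_back(value):
    """Round a Python float to IEEE half precision and convert it back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def mean_absolute_error(a, b):
    """Average of the element-wise absolute differences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


scores = [12.3456, -3.14159, 0.001234, 7.77777]
scores_fp16 = [to_fp16_and_back(s) for s in scores]
print(scores_fp16)
print("MAE:", mean_absolute_error(scores, scores_fp16))
```

Values that are exactly representable in half precision, such as 1.0 or 0.5, survive the round trip unchanged, while most others pick up a small rounding error.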

Latency Measurement

In this analysis, we are focused on comparing the inference speed between pure PyTorch and TensorRT. To achieve this, we run each inference method for multiple iterations and measure the time it takes to process a single input image. By doing so, we gain valuable insights into the real-world performance of both approaches.

For the pure PyTorch inference, we employ a pre-trained ResNet model and run the image classification task multiple times, recording the time taken to process each image. The average latency is then calculated over the specified number of iterations. On the other hand, for the TensorRT inference, we have optimized the same ResNet model using TensorRT and leveraged GPU acceleration to further speed up the inference process. Once again, we run the image classification task multiple times and calculate the average latency.

By comparing the average latencies of both methods, we can quantitatively gauge the speedup offered by TensorRT over pure PyTorch. This performance analysis provides a clear picture of the benefits that TensorRT’s optimization and GPU acceleration bring to the table, paving the way for more efficient and rapid deployment of deep learning models in real-world applications. With these results in hand, we can confidently choose the best inference approach tailored to our specific needs, whether it’s maximum accuracy with PyTorch or lightning-fast performance with TensorRT.

Here is the script to measure latency of pure torch inference.

# Pure torch latency measurement
import time

# Pure PyTorch Inference
def pytorch_inference(model, input):
    torch.cuda.synchronize()  # make sure earlier GPU work has finished
    start_time = time.time()
    output = model(input)
    torch.cuda.synchronize()  # wait for the forward pass to finish on the GPU
    end_time = time.time()
    return output, (end_time - start_time)

# Number of iterations
num_iterations = 10

# Run Pure PyTorch Inference for num_iterations iterations
total_pytorch_latency = 0
for i in range(num_iterations):
    with torch.no_grad():
        output, latency = pytorch_inference(model, input)
    total_pytorch_latency += latency

# Average latency in milliseconds
average_pytorch_latency = (total_pytorch_latency / num_iterations) * 1000
torch.cuda.empty_cache()

And, now let us look at how to measure inference speedup offered by TensorRT engine.

# TensorRT latency measurement
import time
import torch

# TensorRT FP16 Inference
# (uses the stream and h_output objects created in Step 4)
def tensorrt_inference(context, d_input, d_output, host_input):
    start_time = time.time()
    cuda.memcpy_htod_async(d_input, host_input, stream)
    context.execute_async_v2(bindings=[int(d_input), int(d_output)], stream_handle=stream.handle)
    cuda.memcpy_dtoh_async(h_output, d_output, stream)
    stream.synchronize()
    tensorrt_output = torch.Tensor(h_output).unsqueeze(0)
    end_time = time.time()
    return tensorrt_output, (end_time - start_time)

# Number of iterations
num_iterations = 10

# Run TensorRT FP16 Inference for num_iterations iterations
total_tensorrt_latency = 0
for _ in range(num_iterations):
    with torch.no_grad():
        tensorrt_output, latency = tensorrt_inference(context, d_input, d_output, host_input)
    total_tensorrt_latency += latency

# Average latency in milliseconds
average_tensorrt_latency = (total_tensorrt_latency / num_iterations) * 1000

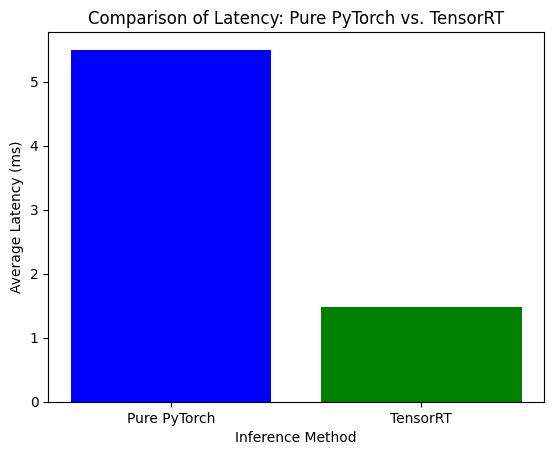
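The loops above keep only a running total, so a single slow iteration (often the first one) can pull the average up without being visible. As a hedged sketch, assuming the per-iteration latencies were collected in a list instead, the standard library can report the median and extremes alongside the mean. The function name `summarize_latencies` and the sample values are hypothetical, not measurements from this post.

```python
import statistics


def summarize_latencies(samples_s):
    """Summarize per-iteration latencies given in seconds, reported in ms."""
    if not samples_s:
        raise ValueError("need at least one latency sample")
    samples_ms = [s * 1000.0 for s in samples_s]
    return {
        "mean_ms": statistics.mean(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "min_ms": min(samples_ms),
        "max_ms": max(samples_ms),
    }


# Hypothetical samples: the first call is much slower than the rest.
example = summarize_latencies([0.020, 0.002, 0.002, 0.002])
for name, value in example.items():
    print(f"{name}: {value:.2f}")
```

When the mean and median differ widely, as in this made-up sample, the median is usually the more representative figure for steady-state latency.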

Now, let’s visualize the comparison of inference latencies between pure PyTorch and TensorRT using a bar chart.

from matplotlib import pyplot as plt

latencies = [average_pytorch_latency, average_tensorrt_latency]
labels = ['Pure PyTorch', 'TensorRT']

# Create a bar chart
plt.bar(labels, latencies, color=['blue', 'green'])

# Add labels and title
plt.xlabel('Inference Method')
plt.ylabel('Average Latency (ms)')
plt.title('Comparison of Latency: Pure PyTorch vs. TensorRT')

# Show the plot
plt.show()

We have collected the average latencies for both methods and stored them in the latencies list, while the corresponding method names, Pure PyTorch and TensorRT, are in the labels list.

Using the matplotlib.pyplot.bar() function, we create a bar chart where each bar represents one of the inference methods. The height of each bar corresponds to the average latency of that method, measured in milliseconds. We have assigned distinct colors, ‘blue’ for pure PyTorch and ‘green’ for TensorRT, making it easy to visually differentiate between the two.

The output plot would look as follows:

In the bar chart, we have two bars representing the inference methods: ‘Pure PyTorch’ and ‘TensorRT’. The height of each bar represents the average latency of that method in milliseconds. The average PyTorch latency is approximately 5.50 ms, while the average TensorRT latency is approximately 1.48 ms.

The disparity between the two bars immediately catches our attention. The ‘TensorRT’ bar is far shorter than the ‘Pure PyTorch’ bar, indicating that TensorRT outperforms PyTorch in terms of inference speed. The speedup offered by TensorRT can be calculated as the ratio of the average PyTorch latency to the average TensorRT latency:

Speedup = Average Torch Latency / Average TensorRT Latency

Speedup = 5.50 ms / 1.48 ms ≈ 3.71

This means that TensorRT achieves a speedup of approximately 3.71x over pure PyTorch. Such an improvement in inference speed can have a real impact on practical applications, enabling faster response times and improving overall system efficiency.
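The same arithmetic can be sketched in a few lines of Python. This example plugs in the rounded latencies quoted above, so the ratio comes out near 3.7 and its second decimal may differ slightly from a value computed on the unrounded measurements. The helper name `speedup` is hypothetical.

```python
def speedup(baseline_ms, optimized_ms):
    """Return how many times faster the optimized run is than the baseline."""
    if optimized_ms <= 0:
        raise ValueError("optimized latency must be positive")
    return baseline_ms / optimized_ms


# Rounded average latencies reported in this post (milliseconds).
pytorch_ms = 5.50
tensorrt_ms = 1.48

ratio = speedup(pytorch_ms, tensorrt_ms)
# The same result expressed as a percentage reduction in latency.
reduction_pct = (1.0 - tensorrt_ms / pytorch_ms) * 100.0

print(f"Speedup: {ratio:.2f}x")
print(f"Latency reduction: {reduction_pct:.1f}%")
```

Reporting both figures can help: a speedup of roughly 3.7x corresponds to cutting latency by roughly 73%.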

Conclusion

In conclusion, our journey through image classification using PyTorch and TensorRT has been an enlightening experience. We witnessed the power of PyTorch in providing accurate and reliable classification results. However, the real revelation came when we optimized the model using TensorRT.

TensorRT’s quantization and GPU acceleration brought remarkable benefits to the table. We observed only a negligible error in the output values after quantization, so accuracy was preserved. The speedup comparison was just as striking: TensorRT ran approximately 3.71 times faster than pure PyTorch.

This performance boost opens up new avenues for deploying deep learning models in real-time applications where speed and efficiency are crucial. With PyTorch for precision and TensorRT for optimization, we are equipped to tackle diverse AI challenges with both accuracy and speed.

As we conclude this journey, we stand confident in combining the strengths of PyTorch and TensorRT, and we look forward to applying these insights to build faster, more intelligent applications.

In the upcoming part of the blog, we will delve into the world of C++ and explore how to build the TensorRT engine and perform inference for the same image classification model. Transitioning from Python to C++ empowers us with the potential to deploy our optimized models in production environments with even greater efficiency. We will witness firsthand the seamless integration of TensorRT’s powerful optimizations and GPU acceleration with C++ code, unlocking the full potential of our deep learning model in high-performance applications. Get ready to embark on the next phase of our exciting journey into the realm of C++ and TensorRT!
